This blog summarizes the general sizing and scaling principles of VMware vSAN on Oracle Cloud VMware Solution (OCVS) for the different types of OCVS clusters, which are either Intel or AMD based. It demonstrates sizing and scaling options for customers who want to migrate workloads to OCVS, extend their local VMware SDDC to an OCVS SDDC, or use OCVS for disaster recovery.

OCVS general overview:

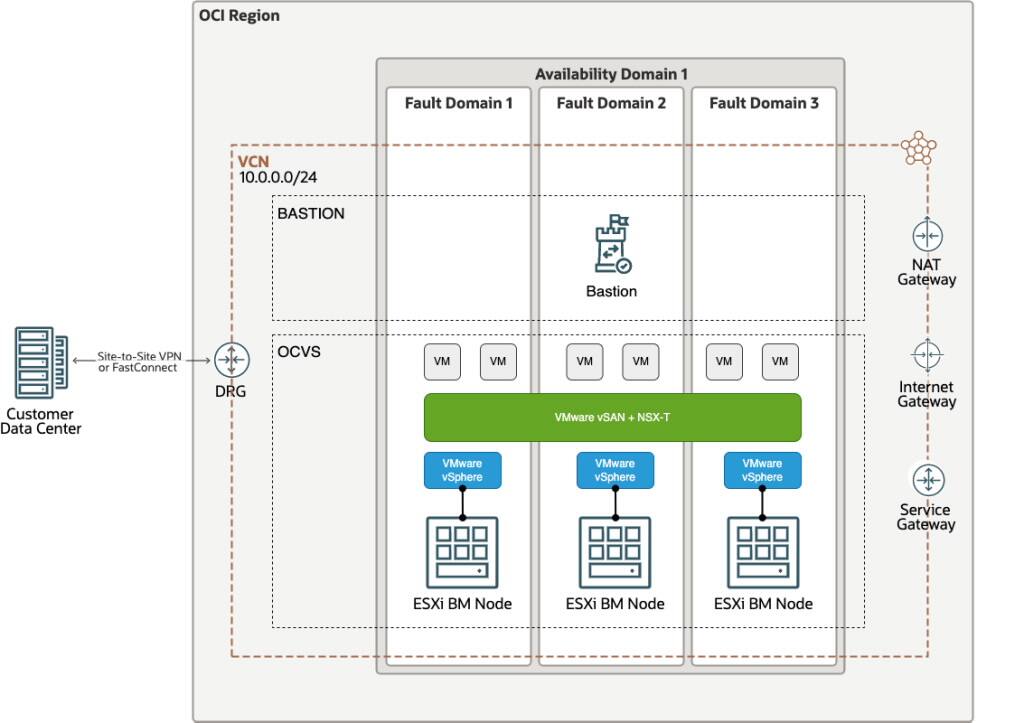

Oracle Cloud VMware Solution (OCVS) is a VMware Software-Defined Data Center (SDDC) solution on Oracle Cloud Infrastructure (OCI). It runs on either Intel or AMD Bare Metal Hosts and is based on the following VMware product stack:

- VMware vSphere Hypervisor (ESXi)

- VMware vCenter Server

- VMware vSAN

- VMware NSX-T

- VMware HCX – Advanced Edition (Enterprise Edition billed separately)

VMware vSAN is a software-defined, enterprise storage solution that supports hyper-converged infrastructure (HCI) systems. vSAN is fully integrated with VMware vSphere, as a distributed layer of software within the ESXi hypervisor.

vSAN aggregates local or direct-attached data storage devices, to create a single storage pool shared across all hosts in a vSAN cluster.

vSAN eliminates the need for external shared storage and simplifies storage configuration through Storage Policy-Based Management (SPBM). Using virtual machine (VM) storage policies, you can define storage requirements and capabilities.

OCVS Bare Metal Shapes:

The following shapes are supported for OCVS ESXi hosts:

| Processor | OCI Shape | Sockets | Cores/Socket | Total Cores | Memory | Storage |
|---|---|---|---|---|---|---|
| Intel | BM.DenseIO2.52 | 2 | 26 | 52 | 768 GB | 51.2 TB |
| AMD | BM.DenseIO.E4.128 – 32 | 2 | 16 | 32 | 2048 GB | 54.4 TB |
| AMD | BM.DenseIO.E4.128 – 64 | 2 | 32 | 64 | 2048 GB | 54.4 TB |
| AMD | BM.DenseIO.E4.128 – 128 | 2 | 64 | 128 | 2048 GB | 54.4 TB |

Storage: NVMe SSD Storage (8 Disks per Host)

See Dense I/O Shapes for more detail.

OCVS Cluster minimum sizing:

The minimum supported size for an OCVS Cluster is always 3 Hosts, because this is the minimum host count a vSAN Standard Cluster requires to support a RAID-1 configuration that can tolerate 1 host failure.

Intel based 3 host cluster:

- 156 Cores per Cluster

- 2304 GB Memory per Cluster

- 153 TB NVMe SSD per Cluster (BM RAW Storage)

- 122.22 TB vSAN raw capacity (3 × 40.74 TB)

AMD based 3 host cluster:

- 96/192/384 Cores per Cluster

- 6144 GB Memory per Cluster

- 163 TB NVMe SSD per Cluster (BM RAW Storage)

- 129.9 TB vSAN

OCVS Cluster types:

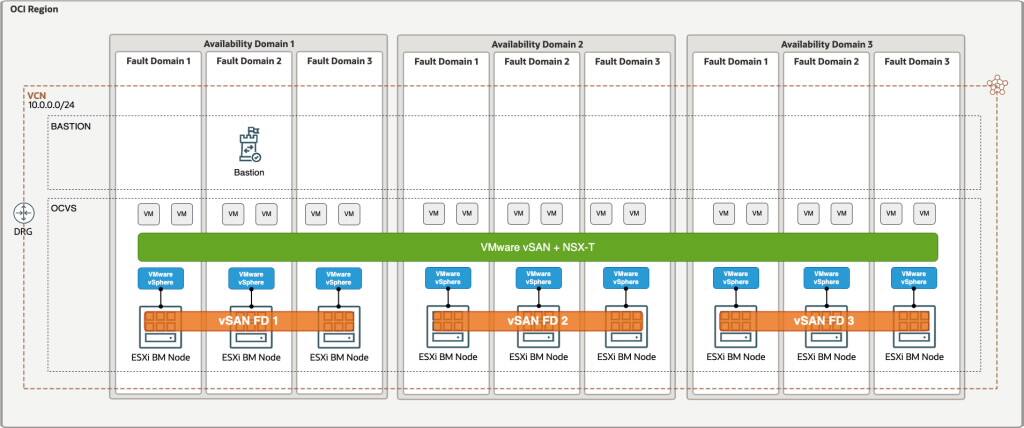

OCVS Clusters are either Single AD Clusters or Multi AD Clusters. A Single AD Cluster resides in a single availability domain and is spread across 3 fault domains. A Multi AD Cluster is stretched across availability domains, where each availability domain acts as a fault domain.

OCVS Single AD Cluster:

Single AD Clusters span 3 OCI Fault Domains within an OCI Availability Domain and aggregate the ESXi hosts' local storage into a vSAN Datastore. Each OCI Fault Domain acts as a vSAN Fault Domain.

OCVS Multi AD Cluster:

Multi AD Clusters can span 3 OCI Availability Domains within an OCI Region and aggregate the ESXi hosts' local storage into a vSAN Datastore. Each OCI Availability Domain acts as a vSAN Fault Domain, building an OCVS Cluster across Availability Domains. This setup provides the maximum availability for an OCVS Cluster and its Virtual Machines.

OCVS vSAN sizing:

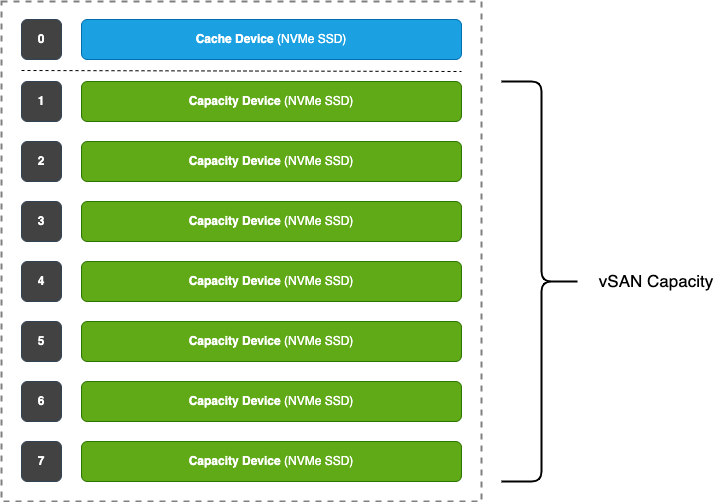

Each ESXi Host participating in a vSAN Cluster on OCVS is equipped with 8 NVMe SSD devices that form a vSAN Disk Group. The Disk Group is the local aggregation of the storage resources, made up of 1 Cache Device and 7 Capacity Devices. On OCVS there is only a single Disk Group per host: because all cache and capacity devices are NVMe based, they do not require additional storage controllers, which eliminates a single point of failure in the vSAN Disk Group design.

The Capacity Devices represent the total amount of vSAN Storage an ESXi Host presents to the vSAN Cluster.

OCVS ESXi Host vSAN Raw Capacity:

OCVS Intel based ESXi hosts are configured with 8x 5.82 TB NVMe SSDs (1x NVMe SSD for vSAN Cache and 7x NVMe SSD for vSAN Capacity). This results in an ESXi Host vSAN Raw Capacity of 40.74 TB.

(Capacity Device Size * Number of Capacity Devices = ESXi vSAN Host Raw Capacity)

OCVS Cluster vSAN RAW Capacity:

The minimum supported configuration for an OCVS Cluster is 3 ESXi Hosts. For Intel based hosts this results in a total vSAN Raw Capacity of 122.22 TB for a 3 Host OCVS vSAN Cluster.

(ESXi Host vSAN Raw Capacity * Number of ESXi Hosts = Cluster vSAN Raw Capacity)
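The two formulas above can be sketched in a few lines of Python. The function and constant names are illustrative only and not part of any VMware or OCI tooling; the device size and device count are the Intel values quoted in this post.

```python
# Illustrative helpers; the names are hypothetical, not a VMware or OCI API.

CAPACITY_DEVICES_PER_HOST = 7  # 8 NVMe devices per host, 1 of them used as cache


def host_raw_capacity_tb(device_size_tb: float,
                         capacity_devices: int = CAPACITY_DEVICES_PER_HOST) -> float:
    """Capacity Device Size * Number of Capacity Devices."""
    return device_size_tb * capacity_devices


def cluster_raw_capacity_tb(host_capacity_tb: float, hosts: int) -> float:
    """ESXi Host vSAN Raw Capacity * Number of ESXi Hosts."""
    return host_capacity_tb * hosts


# Intel based host: 7 capacity devices of 5.82 TB each, 3-host minimum cluster.
intel_host_tb = host_raw_capacity_tb(5.82)
intel_cluster_tb = cluster_raw_capacity_tb(intel_host_tb, hosts=3)
print(f"Host raw capacity:    {intel_host_tb:.2f} TB")
print(f"Cluster raw capacity: {intel_cluster_tb:.2f} TB")
```

Adding hosts changes only the `hosts` argument, which mirrors the scale-out model of OCVS.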

Capacity Dependencies:

The usable capacity of an OCVS vSAN Cluster depends not only on the number of ESXi Hosts provisioned to scale out the OCVS Cluster vSAN Raw Capacity. A few more factors also have an impact on the sizing and scaling of an OCVS Cluster:

- Failure Tolerance Method (FTM)

- Failures to Tolerate (FTT)

- Host Reservation

- Deduplication & Compression

- Operations Reserve & Host rebuild reserve

- Virtual Machine sizing (vCPU & vMEM)

- VMware vSphere HA resources (vCPU & vMEM)

Failure Tolerance Method (FTM) & Failures to Tolerate (FTT):

The Failure Tolerance Method (FTM) and Failures to Tolerate (FTT) have an important role when you plan and size storage capacity for vSAN. Based on the availability requirements of a virtual machine, the setting might result in doubled vSAN capacity consumption or more.

- FTM = RAID Level of a vSAN Object

- FTT = Host failures a vSAN Cluster must withstand without degradation

RAID-1 (Mirroring) – Performance

- If the FTT is set to 0, the consumption is 1x (100 GB VMDK = 100 GB vSAN).

- If the FTT is set to 1, the consumption is 2x (100 GB VMDK = 200 GB vSAN).

- If the FTT is set to 2, the consumption is 3x (100 GB VMDK = 300 GB vSAN).

- If the FTT is set to 3, the consumption is 4x (100 GB VMDK = 400 GB vSAN).

RAID-5/6 (Erasure Coding) – Capacity

- If the FTT is set to 1 (RAID-5), the consumption is 1.33x (100 GB VMDK = 133 GB vSAN).

- If the FTT is set to 2 (RAID-6), the consumption is 1.50x (100 GB VMDK = 150 GB vSAN).

| RAID Configuration (FTM) | FTT | Hosts Required | FTT Calculation | vSAN Used (protection overhead) | vSAN Free (usable for data) |
|---|---|---|---|---|---|
| RAID-1 (Mirroring) | 1 | 3 | 2n+1 | 50% | 50% |
| RAID-5 (Erasure Coding) | 1 | 4 | 3+1 | 25% | 75% |
| RAID-1 (Mirroring) | 2 | 5 | 2n+1 | 67% | 33% |
| RAID-6 (Erasure Coding) | 2 | 6 | 4+2 | 33% | 67% |
| RAID-1 (Mirroring) | 3 | 7 | 2n+1 | 75% | 25% |

RAID-1 (Mirroring) vs RAID-5/6 (Erasure Coding)

- RAID-1 (Mirroring) in vSAN employs a 2n+1 host or fault domain algorithm, where n is the number of failures to tolerate.

- RAID-5/6 (Erasure Coding) in vSAN employs a 3+1 (RAID-5) or 4+2 (RAID-6) host or fault domain requirement, depending on 1 or 2 failures to tolerate respectively.

- RAID-5/6 (Erasure Coding) does not support 3 failures to tolerate; this setting is only available for RAID-1 (Mirroring).
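As a rough sketch, the consumption multipliers and host requirements described above can be captured in two small Python functions. The function names and the `"RAID-1"` / `"RAID-5/6"` strings are illustrative choices for this example, not identifiers from vSAN or SPBM.

```python
# Hypothetical helper names; the multipliers and host counts are the ones
# listed in this post.

def capacity_multiplier(ftm: str, ftt: int) -> float:
    """vSAN capacity consumed per unit of VMDK capacity."""
    if ftm == "RAID-1" and 0 <= ftt <= 3:
        return float(ftt + 1)          # one full copy per tolerated failure, plus the original
    if ftm == "RAID-5/6" and ftt == 1:
        return 4 / 3                   # RAID-5: 3 data + 1 parity
    if ftm == "RAID-5/6" and ftt == 2:
        return 6 / 4                   # RAID-6: 4 data + 2 parity
    raise ValueError(f"unsupported combination: {ftm}, FTT={ftt}")


def min_hosts(ftm: str, ftt: int) -> int:
    """Minimum number of hosts (or fault domains) for the policy."""
    if ftm == "RAID-1" and 0 <= ftt <= 3:
        return 2 * ftt + 1             # 2n+1
    if ftm == "RAID-5/6" and ftt == 1:
        return 4                       # 3+1
    if ftm == "RAID-5/6" and ftt == 2:
        return 6                       # 4+2
    raise ValueError(f"unsupported combination: {ftm}, FTT={ftt}")


vmdk_gb = 100
for ftm, ftt in [("RAID-1", 1), ("RAID-5/6", 1), ("RAID-1", 2), ("RAID-5/6", 2)]:
    used = vmdk_gb * capacity_multiplier(ftm, ftt)
    print(f"{ftm} FTT={ftt}: {used:.0f} GB vSAN, at least {min_hosts(ftm, ftt)} hosts")
```

Raising an error for unsupported combinations, such as erasure coding with 3 failures to tolerate, keeps a sizing script from silently producing a number for a policy that cannot be applied.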

Host Reservation:

Host reservation in vSAN reserves ESXi Hosts in a vSAN Cluster: these hosts participate in the vSAN Cluster and act as dedicated failover resources for host failures or maintenance operations, depending on the availability requirements of the OCVS Cluster. This is an optional setting that brings higher availability and resiliency.

N+1 guarantees an additional host to satisfy the vSAN Cluster availability requirement in case of a host failure or maintenance operations.

N+2 guarantees two additional hosts, so the vSAN Cluster maintains an N+1 reservation at any point in time. This means there is no degradation in performance or availability in case of a host failure or host maintenance.

Deduplication & Compression:

Deduplication and Compression is mostly suitable for highly de-dupable workloads like VDI Full Clones.

- On-disk format version 3.0 and later adds an extra overhead, typically no more than 1-2 percent capacity per device. Deduplication and compression with software checksum enabled require extra overhead of approximately 6.2 percent capacity per device.

The reduction ratios below are based on VMware measurements and indicate how much space deduplication & compression can save on a vSAN cluster per workload type. Always adjust them to the reduction ratios actually measured for the workload in an on-premises environment as a reference value.

Reduction ratio indications:

- General Purpose VMs = 1.5

- Databases = 2.0

- File Services = 1.5

- VDI Full Clone = 8

- VDI Instant Clone = 2

- VDI Linked Clone = 2.5

- Tanzu = 1.5
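To illustrate how such ratios feed into a sizing estimate, here is a minimal Python sketch. It assumes that a reduction ratio of 2.0 means 2 TB of logical data fit into 1 TB of physical vSAN capacity; that interpretation, the function name and the dictionary are assumptions made for this example, and the per-device overhead mentioned above is not modelled.

```python
# Indicative reduction ratios per workload type, as listed in this post.
REDUCTION_RATIO = {
    "General Purpose VMs": 1.5,
    "Databases": 2.0,
    "File Services": 1.5,
    "VDI Full Clone": 8.0,
    "VDI Instant Clone": 2.0,
    "VDI Linked Clone": 2.5,
    "Tanzu": 1.5,
}


def logical_capacity_tb(physical_tb: float, workload: str) -> float:
    """Assumption: logical data that fits = physical capacity * reduction ratio."""
    return physical_tb * REDUCTION_RATIO[workload]


# Hypothetical example: 10 TB of physical capacity per workload type.
for workload in REDUCTION_RATIO:
    print(f"{workload}: {logical_capacity_tb(10, workload):.1f} TB logical")
```

Replacing the dictionary values with ratios measured on-premises is the adjustment the paragraph above recommends.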

Operations Reserve & Host rebuild reserve:

Operations Reserve defines the capacity needed by vSAN to perform internal operations such as policy changes, rebalancing, and data movement.

vSAN provides the option to reserve capacity in advance so that it has enough free space available to perform internal operations and to repair data back to compliance following a single host failure. When the reserve is enabled, vSAN prevents you from using the reserved space to create workloads and so preserves that capacity in the cluster. By default, the reserved capacity is disabled.

If there is enough free space in the vSAN cluster, you can enable the operations reserve and/or the host rebuild reserve.

- Operations reserve – Reserved space in the cluster for vSAN internal operations.

- Host rebuild reserve – Reserved space for vSAN to be able to repair in case of a single host failure.

Enabling the Host rebuild reserve sets aside one host's worth of capacity in the cluster. The Host rebuild reserve works on the principle of N+1. For example, in a 4-node cluster of identical hardware configuration, the Host rebuild reserve requires 25% (1/4th) of capacity to ensure sufficient rebuild capacity. As a vSAN Cluster grows, the share taken by the Host rebuild reserve shrinks: in an 8-node cluster of identical hardware configuration, it requires 12.5% (1/8th).
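The 1/N relationship can be sketched as follows. This is a simplified illustration that assumes identical hosts, as in the examples above; the function name is hypothetical.

```python
def host_rebuild_reserve_fraction(hosts: int) -> float:
    """Share of cluster capacity equal to one host, assuming identical hosts."""
    if hosts < 1:
        raise ValueError("a cluster needs at least one host")
    return 1 / hosts


for hosts in (4, 8, 16):
    share = host_rebuild_reserve_fraction(hosts)
    print(f"{hosts}-node cluster: {share:.1%} host rebuild reserve")
```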

Virtual Machine sizing (vCPU & vMEM):

As an OCVS vSAN Cluster scales horizontally, the vSAN capacity always scales linearly with the vCPU and vMEM resources. This can leave vCPU and vMEM resources unused relative to the provided vSAN storage, and vice versa.

VMware vSphere HA resources (vCPU & vMEM):

VMware vSphere HA is an integral part of every vSphere deployment. It can reserve cluster vCPU and vMEM capacity so that all VMs in the cluster still have sufficient vCPU and vMEM resources when a host goes into maintenance mode or fails due to a hardware error. Typically this HA reserve is calculated as a percentage based on the number of hosts in the cluster. In a 3-node cluster of identical hardware configuration, the HA reserve requires 33% (1/3rd) of capacity. In an 8-node cluster of identical hardware configuration, it requires 12.5% (1/8th). These examples show the HA reservation for an N+1 scenario; for N+2 the reservation doubles.
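A short Python sketch shows what remains for workloads once the HA reserve is subtracted. The function name is hypothetical, identical hosts are assumed, and the 832 vCPU and 6144 GB inputs are the 8-node Intel totals from the example in this post.

```python
def available_after_ha(total: float, hosts: int, failover_hosts: int = 1) -> float:
    """Resources left for VMs after reserving `failover_hosts` hosts (N+1 by default)."""
    if not 0 <= failover_hosts < hosts:
        raise ValueError("failover_hosts must be between 0 and hosts - 1")
    return total * (hosts - failover_hosts) / hosts


# 8-node Intel cluster: 832 vCPU and 6144 GB vMEM in total.
for label, reserve in (("N+1", 1), ("N+2", 2)):
    vcpu = available_after_ha(832, hosts=8, failover_hosts=reserve)
    vmem = available_after_ha(6144, hosts=8, failover_hosts=reserve)
    print(f"{label}: {vcpu:.0f} vCPU and {vmem:.0f} GB vMEM available")
```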

OCVS vSAN scaling:

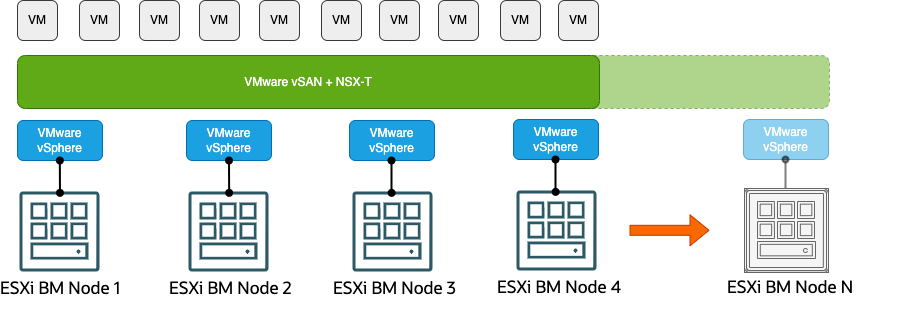

Unlike many on-premises vSAN Clusters, OCVS is a scale-out only solution: it is not possible to scale up an OCVS ESXi Host by adding disks to expand disk groups or by creating additional disk groups. vSAN storage on OCVS can only scale by adding hosts to the OCVS Cluster, also known as horizontal scaling. An OCVS cluster can scale from 3 hosts to a maximum of 64 hosts in a single SDDC.

Oracle recommends that VMware SDDCs deployed across availability domains within a region do not exceed a maximum of 16 ESXi hosts.

Depending on the chosen OCVS shape (Intel or AMD), the scaling of an OCVS Cluster is dictated by the vCPU, vMEM and Storage requirements (FTM and FTT), as well as by potential storage saving techniques like deduplication & compression, depending on the type of workload.

Example:

The table below shows the raw capacity of an 8-node OCVS vSAN Cluster with a CPU to vCPU ratio of 1:2, without applying any storage saving techniques like deduplication & compression and without the operations and host rebuild reserves. The actual usable vSAN capacity is dictated by the FTM and FTT settings (Storage Policy) per Virtual Machine object.

| Nodes | Processor Type | Sockets | Cores per Socket | Physical Cores | Logical Cores | Memory | Storage | vCPU Total | vMEM Total | vSAN Total |
|---|---|---|---|---|---|---|---|---|---|---|
| 8 | Intel | 2 | 26 | 52 | 104 | 768 GB | 40.74 TB | 832 | 6144 GB | 325 TB |
| 8 | AMD | 2 | 16 | 32 | 64 | 2048 GB | 43.3 TB | 512 | 16384 GB | 346 TB |
| 8 | AMD | 2 | 32 | 64 | 128 | 2048 GB | 43.3 TB | 1024 | 16384 GB | 346 TB |
| 8 | AMD | 2 | 64 | 128 | 256 | 2048 GB | 43.3 TB | 2048 | 16384 GB | 346 TB |

See Dense I/O Shapes for more detail.
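The totals in the example table can be reproduced with a small Python sketch. The `HostShape` class, the shape labels and the function name are illustrative and not part of any OCI or VMware API; the per-host values are the ones used in this post. The table shows vSAN totals in whole TB, while the code prints one decimal place.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostShape:
    name: str              # illustrative label, not an official shape name
    physical_cores: int
    memory_gb: int
    vsan_raw_tb: float     # raw capacity of the 7 capacity devices per host


def cluster_totals(shape: HostShape, nodes: int, vcpu_per_core: int = 2) -> dict:
    """Raw cluster totals for a CPU to vCPU ratio of 1:vcpu_per_core."""
    return {
        "vcpu": shape.physical_cores * vcpu_per_core * nodes,
        "vmem_gb": shape.memory_gb * nodes,
        "vsan_raw_tb": shape.vsan_raw_tb * nodes,
    }


SHAPES = [
    HostShape("Intel 52 cores", 52, 768, 40.74),
    HostShape("AMD 32 cores", 32, 2048, 43.3),
    HostShape("AMD 64 cores", 64, 2048, 43.3),
    HostShape("AMD 128 cores", 128, 2048, 43.3),
]

for shape in SHAPES:
    totals = cluster_totals(shape, nodes=8)
    print(f"{shape.name}: {totals['vcpu']} vCPU, "
          f"{totals['vmem_gb']} GB vMEM, {totals['vsan_raw_tb']:.1f} TB vSAN raw")
```

Changing `nodes` between 3 and 64 gives the raw totals for other cluster sizes within the limits described above.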

VMware vSAN sizer:

A very useful and powerful tool for verifying the sizing and scaling of VMware vSAN clusters is the VMware vSAN Sizer. It can calculate a sizing for mixed workload clusters with different FTM & FTT levels, taking into account Virtual Machine CPU and Memory requirements, N+1 or N+2 configurations, deduplication and compression, CPU to vCPU ratios, and the Operations Reserve & Host rebuild reserve.

Conclusion:

This blog summarized the general sizing and scaling principles of VMware vSAN on Oracle Cloud VMware Solution (OCVS) for the different types of OCVS clusters, which are either Intel or AMD based. These principles apply to customers who want to migrate workloads to OCVS, extend their local VMware SDDC to an OCVS SDDC, use OCVS for disaster recovery, or start with a greenfield OCVS deployment to host legacy workloads, VMware Tanzu or VDI.
